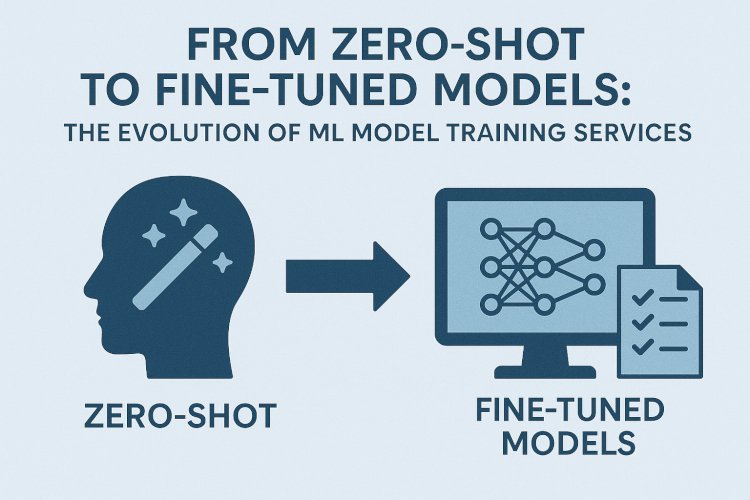

From Zero-Shot to Fine-Tuned Models: The Evolution of ML Model Training Services

The field of machine learning has undergone a significant transformation—from building models from scratch to leveraging powerful pre-trained models through zero-shot, few-shot, and fine-tuning techniques. This evolution has not only accelerated development cycles but also increased the accessibility of AI for businesses across industries.

At the heart of this transformation are machine learning development services, which help organizations choose the right training strategy, optimize performance, and scale models efficiently. In this blog, we explore how model training has evolved and how modern services facilitate this progression.

Understanding Zero-Shot Learning

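Zero-shot learning (ZSL) is a technique where a model performs tasks it was not explicitly trained on. Instead of being retrained for every new task, the model generalizes from representations learned during pre-training, usually guided by a natural-language description of the task or its candidate labels.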

Example:

A language model like GPT can answer questions, write summaries, or translate languages without any task-specific training—a clear demonstration of zero-shot capabilities.
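To make the mechanism concrete, here is a minimal sketch of zero-shot classification: the input is matched against plain-language label descriptions, and no labeled training examples are used. It is a toy under stated assumptions. The bag-of-words vectors stand in for the learned embeddings a real pre-trained model would provide, and the label names and descriptions are hypothetical.

```python
import math
from collections import Counter
from typing import Dict


def bow(text: str) -> Counter:
    """Lowercased bag-of-words vector; a toy stand-in for a learned embedding."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def zero_shot_classify(text: str, label_descriptions: Dict[str, str]) -> str:
    """Pick the label whose description is most similar to the text.

    No labeled examples are involved: only the label descriptions.
    """
    vec = bow(text)
    return max(
        label_descriptions,
        key=lambda label: cosine(vec, bow(label_descriptions[label])),
    )


# Hypothetical labels and descriptions, chosen only for illustration.
labels = {
    "billing": "invoice payment refund charge billing",
    "shipping": "delivery package shipping tracking courier",
}
print(zero_shot_classify("where is my package and tracking number", labels))
```

Adding a new category here only requires a new description, which is why this style of model suits quick prototyping. A production system would replace `bow` with embeddings from a pre-trained model.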

Advantages:

- No task-specific labeled data required

- Quick deployment for new use cases

- Cost-effective for exploratory scenarios

Limitations:

- Lower accuracy on domain-specific or nuanced tasks

- Susceptibility to hallucinations or factual errors

Modern machine learning development services often integrate zero-shot models as part of rapid prototyping workflows, allowing clients to validate ideas before committing to expensive training phases.

Few-Shot and Transfer Learning: The Middle Ground

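Few-shot learning bridges the gap by adapting a model with a very small number of labeled examples, either supplied directly in the prompt or used for a brief round of training. Transfer learning, a related concept, reuses the representations of a pre-trained model and fine-tunes it on a smaller, domain-specific dataset.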

How It Works:

- Start with a general-purpose model trained on large-scale data (e.g., BERT, ResNet)

- Feed in task-specific examples (few-shot) or fine-tune with a modest dataset
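With large language models, one common way to feed in task-specific examples is to place them in the prompt itself, with no weight updates. The sketch below shows that pattern. The function name, the `Input:`/`Output:` layout, and the sample reviews are illustrative assumptions, not a format required by any particular model.

```python
from typing import List, Tuple


def build_few_shot_prompt(
    instruction: str,
    examples: List[Tuple[str, str]],
    query: str,
) -> str:
    """Assemble an instruction, a few labeled examples, and the new query."""
    lines = [instruction, ""]
    for text, label in examples:
        lines.append(f"Input: {text}")
        lines.append(f"Output: {label}")
        lines.append("")  # blank line between examples
    lines.append(f"Input: {query}")
    lines.append("Output:")  # left open for the model to complete
    return "\n".join(lines)


# Hypothetical examples for a sentiment task.
examples = [
    ("The checkout was fast and easy.", "positive"),
    ("My order arrived broken.", "negative"),
]
prompt = build_few_shot_prompt(
    "Classify the sentiment of each review.",
    examples,
    "Support never answered my email.",
)
print(prompt)
```

The resulting string would be sent to whichever model is in use. The examples show the model the expected format and labels without any retraining.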

Tools & Libraries:

- Hugging Face Transformers

- PyTorch Lightning

- TensorFlow Hub

These techniques are standard in modern machine learning development services, especially when businesses lack vast labeled datasets but require reasonably accurate performance.

Fine-Tuning Models for Domain-Specific Tasks

Fine-tuning is the most tailored approach. It continues the training of a pre-trained model on task-specific data, typically far more than few-shot methods use but far less than the original pre-training required. The goal is to make the model highly effective in a particular domain or use case.

Use Cases:

- Healthcare: Fine-tuning BERT models on clinical notes

- Finance: Adapting language models to detect fraud or predict stock movements

- E-commerce: Personalization engines trained on customer behavior data

Benefits of Fine-Tuning:

- Higher accuracy on the target domain than zero-shot or few-shot use typically achieves

- Custom-tailored performance

- Better control over model behavior

Machine learning development services offer fine-tuning pipelines with automated hyperparameter tuning, data augmentation, model versioning, and continuous integration for deployment.
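The core idea of fine-tuning, starting from pre-trained weights and continuing gradient-based training on domain data, can be sketched with a deliberately tiny model. Everything here is an assumption made for illustration: a one-feature linear model stands in for a large network, and the "pre-trained" weights and domain data are invented.

```python
from typing import List, Tuple

Data = List[Tuple[float, float]]


def mse(w: float, b: float, data: Data) -> float:
    """Mean squared error of the linear model y = w * x + b."""
    return sum((w * x + b - y) ** 2 for x, y in data) / len(data)


def fine_tune(w: float, b: float, data: Data, lr: float = 0.05, epochs: int = 200) -> Tuple[float, float]:
    """Continue training from the given weights with batch gradient descent."""
    n = len(data)
    for _ in range(epochs):
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


# Hypothetical "pre-trained" weights learned on some general dataset.
pretrained_w, pretrained_b = 1.0, 0.0

# Hypothetical domain data that follows y = 2x + 1.
domain_data = [(0.0, 1.0), (1.0, 3.0), (2.0, 5.0), (3.0, 7.0)]

loss_before = mse(pretrained_w, pretrained_b, domain_data)
w, b = fine_tune(pretrained_w, pretrained_b, domain_data)
loss_after = mse(w, b, domain_data)
print(f"loss before: {loss_before:.4f}, loss after: {loss_after:.4f}")
```

Real pipelines apply the same loop to millions of parameters, usually with a small learning rate so the model keeps what it learned during pre-training.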

How Machine Learning Development Services Enable This Evolution

Modern machine learning development services offer a comprehensive suite of capabilities to support the full lifecycle of model training and deployment:

- Data Preparation: Involves cleaning, labeling, augmenting, and balancing datasets to ensure high-quality inputs. This step is critical for improving model accuracy and reducing bias.

- Model Selection: Experts help choose the most suitable approach—whether using foundation models (like GPT or BERT), building custom architectures, or deploying ensemble models for better performance.

- Training Infrastructure: Provides access to scalable computing environments using GPUs or TPUs, allowing faster training and experimentation with large models and datasets.

- Monitoring: Implements tools to track model performance in real-time, detect data drift, and monitor accuracy, helping maintain long-term reliability in production environments.

- Compliance: Ensures that AI models are developed and deployed in line with ethical standards, regulatory requirements, and privacy laws, especially critical in sensitive sectors like healthcare and finance.
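As an illustration of the monitoring step, the sketch below flags data drift when the mean of a live feature moves away from its training-time reference. It is one simple heuristic among many. The function names, the sample values, and the 0.5 threshold are assumptions for illustration, not a standard.

```python
import statistics
from typing import Sequence


def mean_shift_score(reference: Sequence[float], live: Sequence[float]) -> float:
    """Shift of the live mean, measured in reference standard deviations."""
    ref_mean = statistics.mean(reference)
    ref_std = statistics.stdev(reference)
    live_mean = statistics.mean(live)
    if ref_std == 0:
        # A constant reference feature: any change at all counts as a shift.
        return 0.0 if live_mean == ref_mean else float("inf")
    return abs(live_mean - ref_mean) / ref_std


def has_drifted(reference: Sequence[float], live: Sequence[float], threshold: float = 0.5) -> bool:
    """Flag drift when the shift exceeds a threshold (0.5 is a hypothetical default)."""
    return mean_shift_score(reference, live) > threshold


# Hypothetical feature values seen at training time and in production.
reference = [10, 11, 9, 10, 12, 8, 10, 11]
live_stable = [10, 9, 11, 10]
live_shifted = [14, 15, 13, 16]

print(has_drifted(reference, live_stable))
print(has_drifted(reference, live_shifted))
```

A check like this would typically run on a schedule for each input feature, with alerts prompting a review or retraining.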

By outsourcing to specialized machine learning development services, businesses can reduce operational overhead, accelerate time-to-market, and ensure technical excellence in ML projects.

Real-World Use Cases

- Retail: A retail company used few-shot learning with GPT models for generating product descriptions based on minimal input. This cut content production time by 80%.

- Healthcare: ML development services helped fine-tune a BERT model to extract symptoms from unstructured patient records with over 90% accuracy.

- Customer Support Automation: Zero-shot capabilities were employed in chatbots to handle FAQs across multiple domains without retraining.

Challenges in ML Model Training Services

While the evolution of model training—from zero-shot to fine-tuned techniques—offers significant advantages, several challenges still hinder seamless implementation:

- Data Scarcity and Bias: Many industries struggle to gather large, high-quality labeled datasets. Additionally, datasets may contain inherent biases, leading to skewed or unfair model predictions.

- Model Overfitting During Fine-Tuning: Fine-tuned models often perform exceptionally well on training data but may fail to generalize to new or unseen data. This overfitting can compromise reliability in real-world scenarios.

- Lack of Explainability in Zero/Few-Shot Models: Zero-shot and few-shot models—though powerful—are often black boxes. Their decision-making processes can be hard to interpret, which raises concerns in regulated sectors like healthcare and finance.

- Latency and Scalability Constraints: Large models or complex pipelines can introduce latency in production environments. Additionally, scaling these systems for millions of users without sacrificing performance remains a major challenge.
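One widely used guard against the overfitting problem described above is early stopping: training halts once validation loss stops improving, and the best checkpoint is kept. The sketch below is a minimal version. The function name and the loss values are hypothetical.

```python
from typing import List


def early_stopping_epoch(val_losses: List[float], patience: int = 2, min_delta: float = 0.0) -> int:
    """Return the index of the best epoch seen before training would stop.

    Training stops after `patience` consecutive epochs without an
    improvement of more than `min_delta` in validation loss.
    """
    best_loss = float("inf")
    best_epoch = -1
    epochs_without_improvement = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best_loss - min_delta:
            best_loss = loss
            best_epoch = epoch
            epochs_without_improvement = 0
        else:
            epochs_without_improvement += 1
            if epochs_without_improvement >= patience:
                break  # stop: later epochs are never evaluated
    return best_epoch


# Hypothetical validation losses: they improve, then start to rise.
val_losses = [0.90, 0.70, 0.60, 0.62, 0.65, 0.50]
print(early_stopping_epoch(val_losses, patience=2))
```

The `patience` setting is a trade-off: a low value stops quickly but can miss a later improvement, while a high value spends more compute before giving up.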

Future Directions in Model Training

As machine learning evolves, several key trends are shaping the future of model training:

- Self-Supervised Learning: Models learn from unlabeled data, reducing the need for costly manual labeling.

- Multimodal Models: Combine text, images, and audio for richer, more versatile AI capabilities.

- RLHF (Reinforcement Learning from Human Feedback): Uses human preference judgments as a training signal so that model outputs align better with human values and safety standards.

- On-Device Fine-Tuning: Enables personalized AI on user devices while preserving privacy and minimizing latency.
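To show how self-supervised learning gets its training signal from unlabeled data, the sketch below turns a raw sentence into (input, target) pairs by masking one word at a time. Masked-token prediction is one such objective, used by BERT-style models. The function name and the whitespace tokenization are simplifying assumptions.

```python
from typing import List, Tuple


def masked_examples(sentence: str, mask_token: str = "[MASK]") -> List[Tuple[str, str]]:
    """Create one (masked sentence, hidden word) pair per word.

    The "labels" come from the text itself, so no manual labeling is needed.
    """
    tokens = sentence.split()  # naive whitespace tokenization, for illustration
    examples = []
    for i, target in enumerate(tokens):
        masked = tokens[:i] + [mask_token] + tokens[i + 1:]
        examples.append((" ".join(masked), target))
    return examples


pairs = masked_examples("models learn from data")
for masked_text, target in pairs:
    print(f"{masked_text!r} -> {target!r}")
```

Real pre-training masks only a fraction of tokens at random and works on subword units, but the principle of deriving targets from the input is the same.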

Machine learning development services are rapidly adopting these innovations to deliver next-gen, scalable, and ethical AI solutions.

Conclusion

The evolution from zero-shot to fine-tuned models marks a shift in how we train and deploy machine learning systems. Instead of building from scratch, businesses now rely on layered training strategies optimized for speed, cost, and performance.

Professional machine learning development services play a pivotal role in guiding this transition. They help organizations assess feasibility, build reliable pipelines, and ensure that models—whether zero-shot or fine-tuned—deliver real value.

FAQs

Q1. When should I choose zero-shot learning?

Zero-shot models are ideal for general tasks or quick experiments where labeled data is not available.

Q2. What’s the difference between fine-tuning and few-shot learning?

Fine-tuning continues training a pre-trained model on a substantial domain-specific dataset and updates its weights, while few-shot learning relies on a handful of examples, often supplied directly in the prompt.

Q3. How do machine learning development services help with model training?

They provide tools, expertise, infrastructure, and automation to build, train, and deploy models efficiently at any stage of the training evolution.

Q4. Are there risks in using pre-trained models?

Yes. Risks include domain mismatch, bias inheritance, and limited explainability—but these can be mitigated through robust model evaluation and testing.
